GenAI@BC Libraries: Added Value

Second in a blog series by members of the BC Libraries GenAI Task Force

It’s becoming clearer that generative AI doesn’t add value universally; it adds value in limited applications and contexts, using particular AI tools adapted to particular circumstances. Anyone turning to GenAI to help with a task should always ask: will this tool add value in this context, for my goals? As we gain experience with these tools, it ought to become clearer when they can be helpful.

Here are a few examples and some reflections.

Example 1, email

At a staff workshop a colleague recently demonstrated how Copilot could help write and adapt a set of emails to send to multiple audiences with different stakes in an announcement. After demonstrating several iterations of prompts, the presenter made two good points: one, that the writer has to be capable of judging text output and re-prompting Copilot to revise unsatisfactory results, and two, that this was a case where the point of using GenAI was to save time on ancillary–not core–work.

In this case, both the nature of the goal (increased efficiency with ancillary tasks) and the knowledge and skill of the user set some tight constraints on GenAI’s added value. There’s a fairly narrow range of skill that would both be sufficient to assess output and also benefit from saving time on writing emails: it’s easy to imagine that a skilled writer could create a template email and several versions intended for different audiences in only a little more time than it would take to craft prompts, evaluate results, and re-prompt to revise. But the converse can also be true when writing for different audiences isn’t a person’s forte.

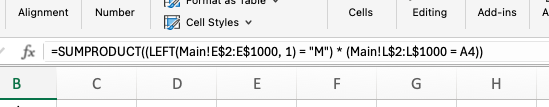

Example 2, Excel

Another colleague–a power-user of MS Excel for data analysis–demonstrated some queries directing MS Copilot to output some complex Excel functions. He explained that he likely could have built the functions himself by referring to online sources, but it might have taken many hours instead of one.

In a task like this, the constraints on GenAI adding value are a little broader: a person can be experienced enough with Excel functions to recognize when a generated function will likely work, but inexperienced enough that building the function would be troublesome and time-consuming.

Using Copilot to generate Excel functions (or various kinds of code) has the added benefit that Copilot explains how the function works, which could help a person with less expertise judge whether it would achieve what they intended. (Copilot specifically has been shown to increase coding efficiency in the workplace.)

In both cases the primary added value is saving time, but that only works when the user has sufficient existing experience, knowledge, and skill both to frame queries and to recognize when output needs more work.

All this is to say that GenAI can’t really help a person leapfrog past acquiring skills and knowledge the slower way: through experience and instruction. When it provides explanations of its output (or a user prompts it to provide explanations), it could shorten the learning process by providing what librarians call “point of need” instruction, but it can’t circumvent it. Learning takes time and attention that can’t be outsourced.

Using GenAI to skip too far past competence puts one in the unenviable position of accepting the output on trust alone. You might save time in the short run, but flawed output might come back to haunt you: emails might sound “off” to their recipients, or Excel code might either not work or–worse–appear to work but bake unrecognized mistakes into the analysis.

Not only might someone with insufficient experience not know how to judge output; they might not be sufficiently suspicious of output to be critical in the first place. People often compare the advent of AI to the advent of calculators: they augmented our abilities in a similar way, and there were similar criticisms about dependency and learning. But some concerns, it turns out, were valid for calculators: people trust calculator results even when they shouldn’t.

Emails are one thing, data analysis another, but using AI in medicine to relieve overloaded general practitioners raises the need for accuracy to a new level. Medical practitioners using GenAI diagnostically would need to:

- apply it only in the narrow contexts in which the AI was trained

- carefully evaluate output, and

- invite physician oversight

The risk in all of this is automation complacency: users becoming reliant on decision aids that they perceive as superior to their own judgement, a bigger risk for a subject-matter novice than for someone with more expertise. In the words of Shelly Palmer, a prolific writer on GenAI, it’s a skills amplifier, not a skills democratizer.